This assignment is worth 25 points and has two parts.

Implement either

the minimax algorithm (including alpha-beta pruning)

or Monte Carlo tree search (MCTS).

First

clone (or update your existing copy of) the ai_1

repository. Look in the src/minimax

subdirectory, which has source files for the classes described in

this section.

Write a general-purpose implementation that can search any game that implements the following interface:

// S = state type, A = action type

public interface AbstractGame<S, A> {

S initialState(int seed);

S clone(S state);

int player(S state); // which player moves next: 1 (maximizing) or 2 (minimizing)

List<A> actions(S state); // available moves in this state

void apply(S state, A action); // apply action to state

boolean isDone(S state); // true if game has finished

double outcome(S state); // 1000.0 = player 1 wins, 0.0 = draw, -1000.0 = player 2 wins

double PLAYER_1_WIN = 1000.0, PLAYER_2_WIN = -1000.0, DRAW = 0.0;

}Your minimax or MCTS class will implement this interface:

interface Strategy<S, A> {

A action(S state);

}A Strategy represents a strategy for playing a game. It is essentially a function which, given a state, decides what action the current player should take.

You must implement your algorithm so that it will work even if moves do not strictly alternate. For example, in some games player 1 might move twice, then player 2 might move twice, and so on. (Warlight is like this, since on each player's turn they have two moves: one to place armies and one to attack/transfer.)

For minimax, you will need this additional interface:

public interface HeuristicGame<S, A> extends AbstractGame<S, A> {

double evaluate(S state);

}

evaluate is a

heuristic evaluation function

that can estimate the expected outcome of the game at any state.

You will need to use

this evaluation function when you cut off the search because it has

reached its maximum depth.

You should implement the following class in

Minimax.java:

class Minimax<S, A> implements Strategy<S, A> {

public Minimax(HeuristicGame<S, A> game, int limit) {

…

}

}

In the constructor, limit

represents a search depth.

For example, if limit is

2, your action method

should consider all possible sequences of 2 actions from the given

state. If limit

is 0, there is no depth

limit and you should search the entire game tree.

Your implementation should include alpha-beta pruning.

Don't forget to clone the game state before applying an action as you descend the game tree recursively.

At a leaf node representing a completed game, it's best to call the outcome() method to find the node's value. (In theory evaluate() should return the same value for a completed game, but that is not guaranteed. For example, in some of the sample games in the ai_1 repository, evaluate() always returns a dummy value.)

Your minimax implementation should adjust its computed minimax values so that a win in fewer moves is favored over a win that will come later. (And, equivalently, a loss that happens later is not as bad as a loss that occurs sooner.) This may significantly improve its performance against weaker players. That's because if all wins or losses are considered equal, then if minimax discovers that it is in a position in which will it will lose if the opponent plays perfectly, it will consider all moves to be equally bad, and is likely to make a foolish move that allows the opponent to win immediately. But if it favors moves that will put off the loss until later, that gives the opponent (who may not see so far ahead) an opportunity to make a move that is less than perfect, which salvages the game.

For debugging, I recommend adding a field "int debugLevel" to your Minimax class, with value 0 by default. If debugLevel > 0, you can print out all moves and their minimax values up to the search depth indicated by debugLevel. This will be useful not only for debugging your Minimax class itself, but also for seeing why your agent makes the moves it does with any given heuristic function.

For Monte Carlo tree search, if you can find the fourth edition of our textbook (Russell & Norvig), then I recommend that you follow the description of MCTS in section 5.4. (This algorithm is not discussed in the third edition.) Otherwise, you may attempt to follow this survey article:

Cameron Browne et al, "A Survey of Monte Carlo Tree Search Methods" (2012)

The article is a bit dense, so if you have questions about it then let me know.

Implement the following class in Mcts.java:

// Monte Carlo tree search

class Mcts<S, A> implements Strategy<S, A> {

public Mcts(AbstractGame<S, A> game, Strategy<S, A> base, int limit) {

…

}

}

In the Mcts constructor:

base is a strategy implementing

the playout

policy to be used for

simulations.

limit

represents the number of

iterations to peform. Each iteration includes a node expansion and a

playout.

Be sure to clone the game state in two situations: (a) before you apply an action while expanding a node, and also (b) before you begin a simulation from a newly created node.

The outcome() method in the AbstractGame interface returns a value that is +1000.0 for a win by player 1, 0.0 for a draw, and -1000.0 for a win by player 2. For Monte Carlo tree search to work properly, you will need to scale this into a value where 1.0 is a win and 0.0 is a loss. You might do that like this:

double o = game.outcome(s);

// convert to 1.0 = player 1 win, 0.5 = draw, 0.0 = player 2 win

double w = (o - AbstractGame.PLAYER_2_WIN) /

(AbstractGame.PLAYER_1_WIN – AbstractGame.PLAYER_2_WIN);

For debugging, I recommend adding a field "boolean debug" to your class, with value false by default. If this field is true, then each time that MCTS chooses an action you could print out the number of wins and number of playouts in each node below the root. This will be very helpful for seeing why your agent behaves the way it does.

You could also add a method reportStats() that reports statistics aggregated over the entire lifetime of a Mcts object. The following might be especially useful for performance optimization:

average iteration time in ms

average number of simulation steps per iteration (i.e. the average playout length)

average time per simulation step in μs

The ai_1 repository contains several sample games that implement the interfaces above: TrivialGame, Tic-Tac-Toe, and Connect Four. It would be wise to test your minimax or MCTS implementation on these games before tackling Warlight in the next section.

TrivialGame is, well, trivial. In this game, there are only two moves. The first player chooses a number from 1 to 3, then the second player chooses a number from 1 to 3. Then the game ends; the player with the higher number wins. If the numbers are the same, the game is a draw. Although this game is extremely simple, it may be useful as a first test of your algorithm's functionality.

Of course you are already familiar with Tic-Tac-Toe, and probably with Connect Four as well.

All of the sample games implement the HeuristicGame interface, so they will work with minimax. However, for TrivialGame and TicTacToe the evaluate() function just returns a dummy value. For Connect Four, the evaluate() function computes a heuristic by scanning the board and looking for groups of adjacent stones that contain discs of the same color.

For each

of the sample games, there is a command-line script (trivial,

tictactoe,

connect_four)

that launches it. Run the script with no arguments to see a list of

options and available strategies. Specify one strategy on the command

line to play as a human against that strategy, or two strategies to

have them play each other. Use the -sim option to run a series of

simulated games. Using these options, you should be able to debug

your implementation. You may also find it interesting to see how it

does against various strategies as you vary its parameters.

The following strategies are available for all the sample games:

random – plays randomly

minimax:<depth> - runs minimax with the given search depth, or 0 for unlimited depth

mcts:<iterations>/<base-strategy> - Monte Carlo tree search with the given number of iterations and base strategy

Of course, the 'minimax' and 'mcts' strategies will only work if you implement them, i.e. write the Minimax or Mcts classes. :)

For each game, there are one or more additional strategies available. For TrivialGame:

perfect – a perfect player (always chooses 3)

For TicTacToe:

basic – plays a move that wins immediately if possible, otherwise blocks an immediate win by the other player if possible, otherwise makes a random move

For Connect Four:

basic – plays a move that wins immediately if possible, otherwise blocks an immediate win by the other player if possible, otherwise makes a random move

heuristic – plays a move that wins immediately if possible, otherwise blocks an immediate win by the other player if possible, otherwise makes the move that gives the largest immediate gain in the evaluation heuristic

Here are a few examples showing how to launch these sample games:

$ ./trivial minimax:0 random -sim 100

Run 100 games of TrivialGame in which minimax (with no depth limit) plays versus a random strategy.

$ ./tictactoe mcts:100/random basic -sim 100 -v

Run 100 games of Tic-Tac-Toe in which MCTS (with 100 iterations, and using 'random' as the base strategy) plays against 'basic'. Print verbose output, i.e. show the random seed and outcome of each game.

$ ./tictactoe mcts:100/random basic -seed 22

Watch MCTS (with 100 iterations, and using 'random' as the base strategy) play Tic-Tic-Toe interactively against 'basic', with seed 22.

$ ./connect_four heuristic

Play Connect Four interactively against 'heuristic'.

If your implementation of minimax or MCTS is correct, here is how you should do against these games:

In TrivialGame, you will defeat a random strategy 67% of the time, and will always draw against the perfect strategy.

In Tic-Tac-Toe, you should win at least 70-80% of games versus 'basic' as player 1. As player 2, you should win at least 15% of games versus 'basic' and should never lose. With MCTS you might need a few hundred iterations to achieve these win rates, and might need at least 500-600 iterations in order to never lose a game.

In Connect Four, minimax with a search depth of 4 should win against 'heuristic' at least 80% of the time. MCTS seems very strong in Connect Four: I've found that it wins about 95% of the time against 'heuristic' with only 200 iterations using 'heuristic' as the base stategy.

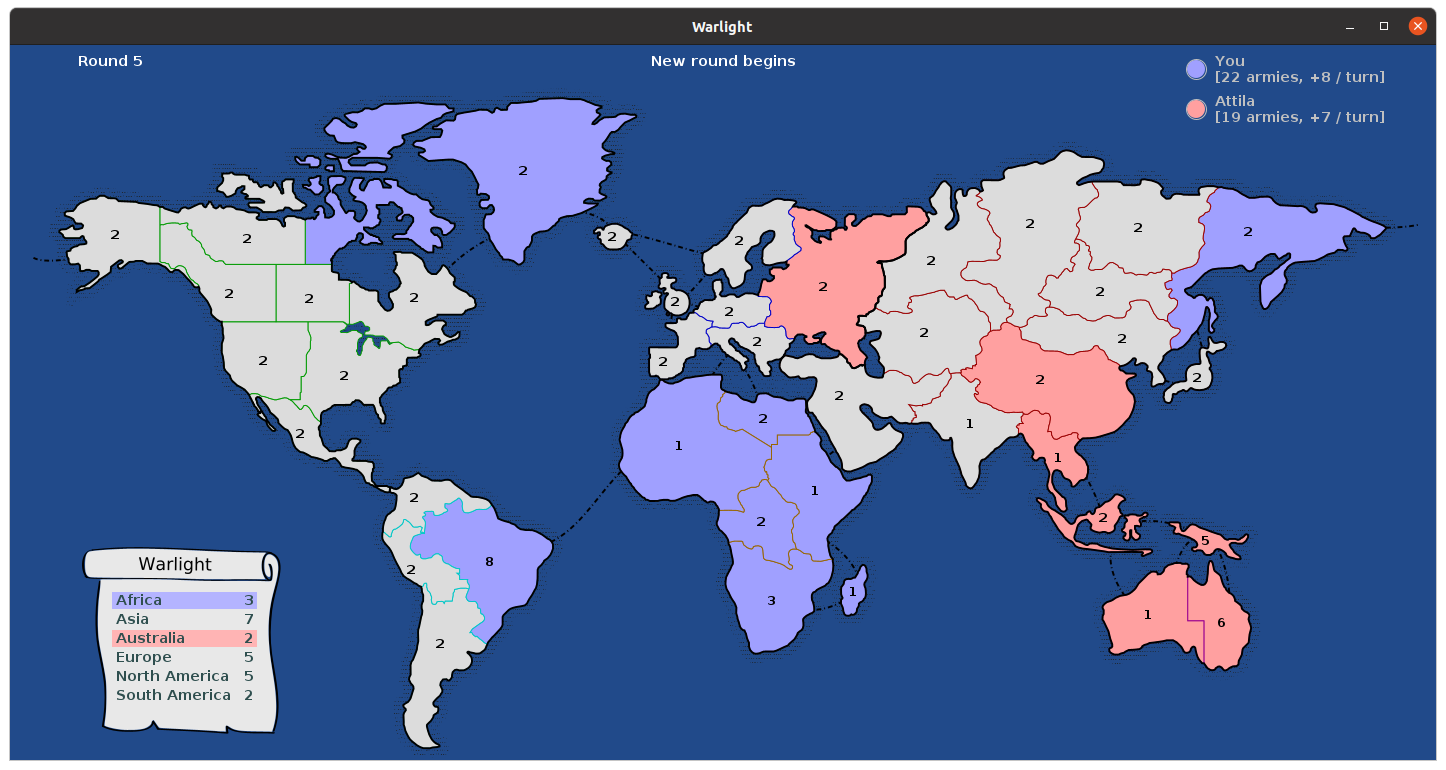

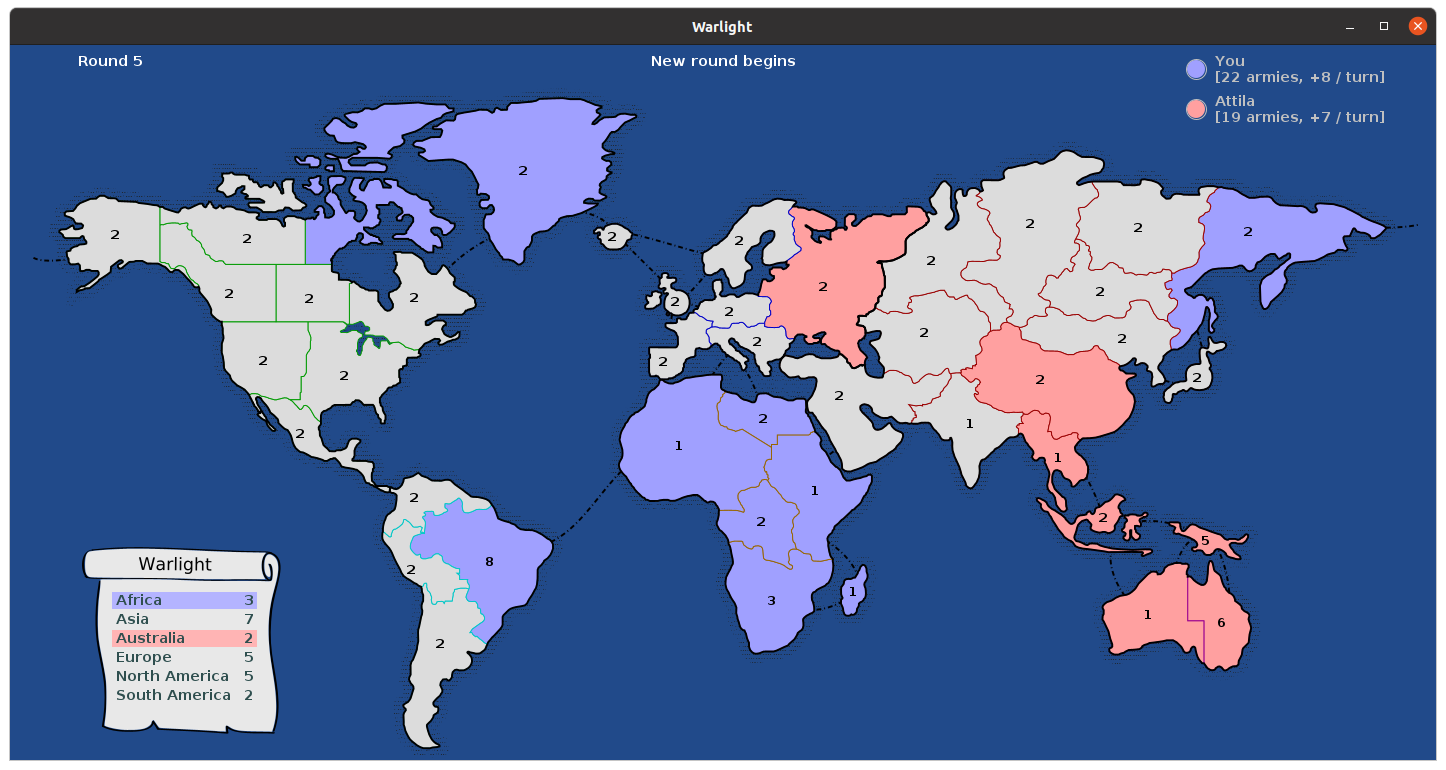

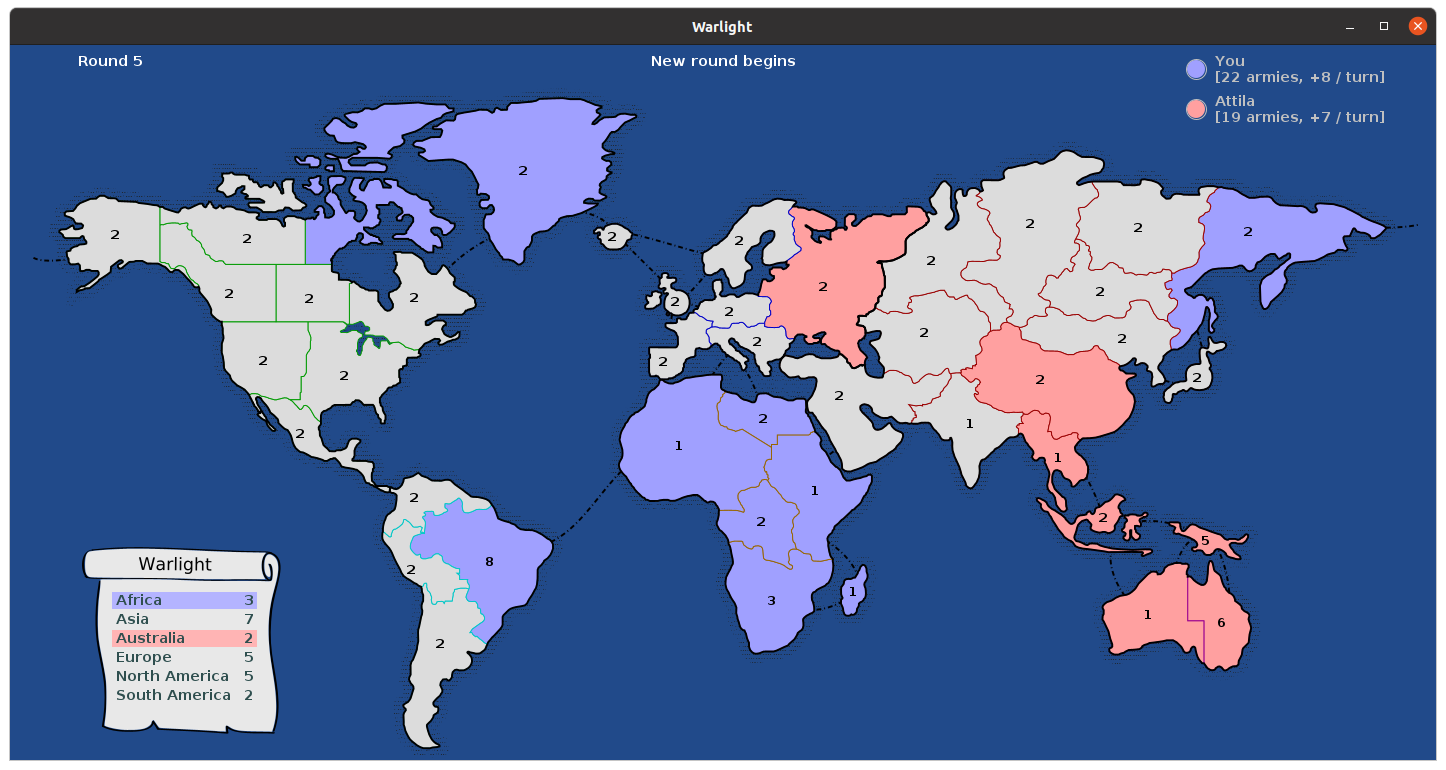

Download the code from the Warlight repository on GitHub. Read the game rules on the GitHub page.

Write an agent that plays Warlight. You may use your minimax or MCTS implementation from the previous section, or another approach if you prefer.

Assuming you will use minimax or MCTS, you will need to do the following:

Write a class WarlightGame

implementing HeuristicGame<S, A>

(for minimax) or AbstractGame<S, A> (for MCTS).

Use the Warlight

classes Game and Move

as your state type S and action type A. Your class might look

something like this:

class WarlightGame implements HeuristicGame<Game, Move> {

@Override

public Game initialState(int seed) {

return new Game(null);

}

...

}

In the actions() method, you

cannot return all moves

that are possible in a given state, since that would make the

effective branching factor far too high. Instead, you should choose

a limited set of possible moves

at least somewhat strategically.

If you are using minimax, you will need to invent an evaluation function for Warlight board states.

If you are using MCTS, you will need a base strategy to be used for simulations, i.e. rollouts. You could use a strategy that plays randomly, or the strategy played by one of the existing agents, or could invent your own strategy for this purpose.

Write an agent MyAgent

that uses your Minimax

or Mcts

class to play Warlight.

To receive full credit for this assignment, your agent will need to be able to win 75% of games versus Napoleon, who is the strongest of the built-in agents. Specifically, ReCodEx will run 50 games of your agent versus Napoleon, and you will need to win at least 38 of these games for full credit. If you win at least 33 games (i.e. over 65%), ReCodEx will award you partial credit for the assignment.

There is a time limit of 5 minutes for the 50 games on ReCodEx. That might sound like a lot, but a typical Warlight game might have 50 turns, in each of which your agent will make 2 moves, so in the 50 games you will probably make on the order of 5,000 moves. That means that your agent will probably need to make its moves in about 50 ms on average in order to not exceed the time limit. That should still be enough for several hundred iterations of Monte Carlo tree search if you have a fast base strategy.

When you write your agent, it will be easiest

to implement the Agent interface directly rather than

inheriting from the abstract class AgentBase.

The set of actions that you return from actions() will have a large impact on your agent's performance. As a starting point for your experimentation, I recommend returning 6-8 random actions from this method, heavily weighted toward actions that are likely to be strong according to your own heuristics. It is probably best to favor attacks over transfers, to favor attacks on the enemy over attacks on neutral territories, and to never generate an action that includes an attack that is likely to fail. In your weighted choice of actions, you may not need to consider strategic questions such as which continent to attack next; in an ideal world, your algorithm will be able to figure that out automatically.

When considering army placement actions, you can greatly reduce the set of possibilities by only generating actions that place all armies on a single territory. An agent that places armies this way can still be pretty strong.

If you are writing a heuristic evaluation function for minimax, think about the following point. Consider two positions A and B. In position A, I have 5 out of the 6 regions of Africa. In region B, I have 5 regions that are scattered around the planet, with one on each continent. Surely A is a stronger position. Your evaluation function should reflect that, i.e. favor position A over B. This will give minimax an incentive to build up armies on a particular continent, which is strategically important. If your evaulation function only rewards continents that are fully owned, it will probably perform poorly because your minimax search will often reach its depth limit before a continent is actually captured.

For MCTS, I recommend a base strategy that is simple and fast. However, a completely random base strategy will probably perform very poorly. Ideally you would like MCTS to be able to perform each simulation step (i.e. each move in a playout) in about 1 – 2 μs. This will allow you to perform several hundred iterations in less than 50 ms.

Getting minimax or MCTS to perform well may take some time; there are many variables you can adjust. Be prepared to do some patient experimentation. If you get stuck, let me know.

All entries received before the deadline for this assignment will automatically entered into a tournament. There will be three tournament categories:

In one category, the winner will be the agent with the highest win rate versus Napoleon+3, which is Napoleon strengthened with 3 extra armies on each starting territory. You can run your agent versus this formidable opponent like this:

$ ./warlight MyAgent Napoleon+3

In the second category, I will run a series of games in which each of your agents will play against every other agent several times. The winner will be the agent with the highest win rate, averaged over all the games.

In the third category, all of your agents will play each other in a series of 4-player games on a larger map. In each game, the first-place winner will win 3 points, second place will win 2 points and third place will win 1 point. The winner will be the agent with the highest average score per game. This category provides an extra challenge since the standard minimax algorithm (and Monte Carlo tree search in its usual form) only work for 2 players, so if your agent uses one of these algorithms then you will need to adapt it in some way if you wish to compete in this category.

The map for this category is a map of Western Europe with 98 territories. To run a game on this map, type

$ ./warlight -map western_europe

For a dramatic finish, the 4 top agents will play in one final championship game, the winner of which will win 3 extra ReCodEx points.

In the tournament games there will be no time limit per move. However, only agents that are fast enough to complete 50 games vs. Napoleon within the 5-minute time limit on ReCodEx are eligible for the tournament. To be eligible for tournament category 3, your agent must also pass the warlight_multi test on ReCodEx, which plays three 4-player games including your agent and must finish within a time limit of 60 seconds.