This assignment is worth 20 points and has two parts.

Implement uniform-cost search in Java.

To begin, first ensure

that you have Java version 11 or higher. Clone

the ai_1 repository,

which contains files for this course's assignments. Look in the

ucs/src subdirectory, which has source files for the

classes described in this section.

You will write a general-purpose implementation that can search any problem that implements this interface:

// S = state type, A = action type

interface Problem<S, A> {

S initialState();

List<A> actions(S state);

S result(S state, A action);

boolean isGoal(S state);

double cost(S state, A action);

}

Build your implementation in Ucs.java, in this class:

// uniform-cost search

class Ucs<S, A> {

public static <S, A> Solution<S, A> search(Problem<S, A> prob) {

… your implementation here …

}

}

The search() method should return a Solution<S,

A> if a solution was found, otherwise null. Solution<S,

A> contains these fields:

class Solution<S, A> {

public List<A> actions; // series of actions from start state to goal state

public S goalState; // goal state that was reached

public double pathCost; // total cost from start state to goal

…

} Your search method could be used like this, for example:

Problem<Integer, Integer> p = new MyPuzzle(); Solution<Integer, Integer> result = Ucs.search(p);

The ucs/src subdirectory includes several Problem

instances that you can use for testing:

Empty.java – an problem with a

single state, which is also the goal

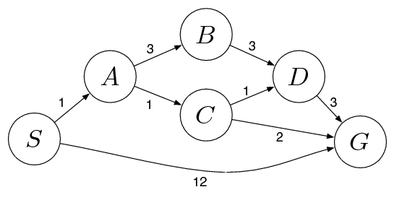

Graph.java – a small

undirected graph

Line.java – a one-dimensional

state search problem

Grid.java – a two-dimensional

state search problem

It would be wise to ensure that your uniform-cost search implementation works on these problems before attempting to use it in your Ms. Pac-Man agent. Run the tests in UcsTest.main().

Our Java collections overview will be helpful.

Create a class Node<S, A>

that will hold nodes for the search.

Use a PriorityQueue<Node<S,

A>> to store the frontier.

Make your Node class implement

Comparable<Node<S,

A>> and give it a compareTo method that

compares nodes by path cost.

To store the explored set, use a data

structure that lets you check you quickly check whether a state is

in the set, e.g. a HashSet<S>.

(You may assume that the state class (S) in any Problem instance

correctly implements the getHash() method, so you may use states as

hash keys.)

Your algorithm will sometimes discover a new path to a state that is already in the frontier. There are several possible approaches you could take in this situation:

Check whether the new path is cheaper than

the existing path. If it is, you want to record it, but you cannot

simply update the fields of the existing frontier node, since

that will not change its position in the priority queue. Instead,

you can remove the existing frontier node from the queue, and add a

new node representing the new and cheaper path. With this approach,

you will never have two nodes in the queue that represent the same

state. However, removing an existing node is potentially slow,

since the remove method of PriorityQueue

runs in O(N).

Check whether the new path is cheaper than

the existing path. If it is, then add a new node representing the

new and cheaper path, but leave the existing node in place in the

queue as well, to avoid the expensive remove

operation. With this approach, there may be multiple nodes in the

queue that have the same state. So whenever you remove a node from

the queue, you must check whether its state is already in the

explored set. If so, you have already handled that state, so

you should ignore the node.

Always add a new node representing the new path, even if it is more expensive than the existing path. With this approach, you will have more duplicate nodes in the queue than in approach (2). As in approach (2), when you remove a node from the queue you will need to check whether it has already been explored.

With

approach (1) or (2), you will need some way to quickly check whether

a given state is in the frontier so that you can find its existing

path cost. One way to do that is to use a HashMap<S,

Node<S>> to keep a map from states to frontier

nodes.

I recommend approach (2) or (3), since (1) will become slow as the frontier grows large.

In this part of the assignment, you will use uniform-cost search to play Ms. Pac-Man.

Download the Ms. Pac-Man Java code from the MsPacMan-vs-Ghosts-AI repository. When you visit that page on GitHub, be sure to read the entire page – it has lots of useful information about the game, including a link to the API documentation.

Write your agent in

PacMan-vs-Ghosts-Agents/src/MyPacMan.java, which

initially holds a dummy implementation.

I recommend the following basic approach. In your

agent code, create an instance of the Problem<S, A>

interface above and call Ucs.search() on every game

tick. Perform a uniform-cost search over the physical maze graph, not

the abstract tree of game states. (Be sure that you understand the

difference between these.)

In the API, each position in the maze is a node in the maze graph. The initial node for your search should be Ms. Pac-Man's current position. I recommend that every pellet be a goal node, unless it is close to a non-edible ghost. Edible ghosts also make great goal nodes. :)

When Ucs.search() returns a Solution,

the first action in the solution is the direction that Ms. Pac-Man

should go.

Assign costs to graph edges as necessary to avoid going along undesirable paths. Any edge that ends at a non-edible ghost should have a very high cost. You may also want to give high costs to edges that are close to ghost positions, or that move in a direction toward the ghosts. If you want to be smarter, consider not only where the ghosts are now, but where they are heading.

Remember that uniform-cost search (i.e. Dijkstra's algorithm) does not work for negative edge weights. So don't be tempted to assign negative weights to edges that lead to places you do want to go, such as power pellets – that won't work. A better approach is to make these be goal nodes.

The game runs at 25 frames per second, so your tick() method will need to return in under 40 ms. There are about 1,000 nodes in the graph, which is a small enough number that you can actually perform a uniform-cost search over this graph in this amount of time, as long as you don't do too much work in your path cost function. When you run your agent, if you see messages of the form

[SIMULATOR] PacMan is still thinking!

then your agent is sometimes failing to respond in 40 ms, and you will need to make it faster. Be cautious about using the getGhostPathDistance() function, which can eat up a lot of time (see the API notes on the GitHub page).

To gain insight into your agent's behavior, use the GameView.addPoints() method to draw the path that Ms. Pac-Man is currently following. You can generate this path from the list of actions in the Solution that you generate on each tick. You may even want to draw different edges of the path in different colors to indicate the cost (i.e. danger) along those edges.

ReCodEx will test your test your MyPacMan

agent by playing 50 games, each with a different random seed. Note

that in each game Ms. Pac-Man initially has 3 lives and may

potentially go through many different levels.

To score 10 points for this part of the assignment, your agent must score an average of at least 10,000 points per game. ReCodEx will give you partial credit for lower scores, but no credit for any agent that averages less than 5,000 points per game.

To run the testing process yourself, run the Evaluate class (in PacMan-vs-Ghosts-Agents/src/Evaluate.java). This will run 50 random games and report your agent's average score on these games.

All agents submitted before the soft deadline for this assignment will be entered into a tournament. Entries will be ranked by highest average score. In the tournament, I will evaluate agents by playing as many random games as necessary to ensure that the differences in average scores are statistically valid.